Using LLMs to Structure and Visualize Policy Discourse

Aaditya (Sonny) Bhatia

United States Military Academy, West Point, NY

Advisor: Dr. Gita Sukthankar

University of Central Florida, Orlando, FL

Sunday, August 4, 2024

Context

Policy decisions pose complex, wicked problems [1, 2]

- Effectiveness is revealed only by implementing a solution, and there is only one attempt

- Measuring the impact shifts the problem itself

- Public discourse helps shape solutions; crucial for policy-making

Determining public opinion

- Surveys and polls -> Social Media -> Discussion Platforms

- People are willing to express their views freely

- Digital platforms provide a wealth of data

- Unstructured, vast, and complex

Problem Statement

How can LLMs enable us to

- ingest massive streams of unstructured information

- incorporate diverse perspectives, and

- distill them into actionable insights that

- align with public opinion?

What are the inherent risks associated with the deployment of LLMs?

Related Works

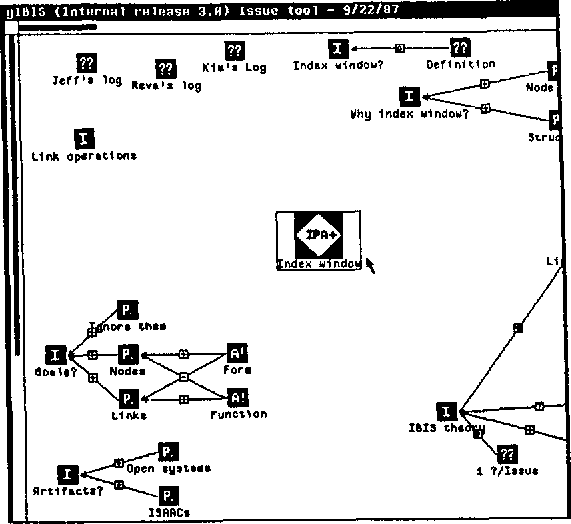

gIBIS

- Networked decision support system (J. Conklin and Begeman 1988)

- Structured conversation using

  - Issues

  - Positions

  - Arguments

- Helped identify underlying assumptions

- Promoted divergent and convergent thinking

- Limited by its structure, learning curve, and scalability

- Provided the basis for several other tools
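The issue/position/argument model that gIBIS popularized (and that D-Agree later grows as an "IBIS tree") is essentially a small tree. A minimal Python sketch, assuming a plain dataclass representation; the class names, the `?`/`!`/`+`/`-` outline markers, and the example discussion are hypothetical and not taken from gIBIS itself:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Argument:
    text: str
    supports: bool  # True: argues for the position; False: argues against it


@dataclass
class Position:
    text: str
    arguments: List[Argument] = field(default_factory=list)


@dataclass
class Issue:
    text: str
    positions: List[Position] = field(default_factory=list)

    def outline(self) -> str:
        """Render as an indented outline: '?' issue, '!' position, '+'/'-' argument."""
        lines = [f"? {self.text}"]
        for position in self.positions:
            lines.append(f"  ! {position.text}")
            for arg in position.arguments:
                sign = "+" if arg.supports else "-"
                lines.append(f"    {sign} {arg.text}")
        return "\n".join(lines)


# Hypothetical example discussion.
issue = Issue(
    "How should the city reduce downtown traffic?",
    [
        Position(
            "Add protected bike lanes",
            [Argument("Encourages cycling", True), Argument("Removes parking spaces", False)],
        )
    ],
)
print(issue.outline())
```

Keeping positions and arguments as nested lists mirrors the tree shape directly, which is what makes the structure easy to grow incrementally during a discussion.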

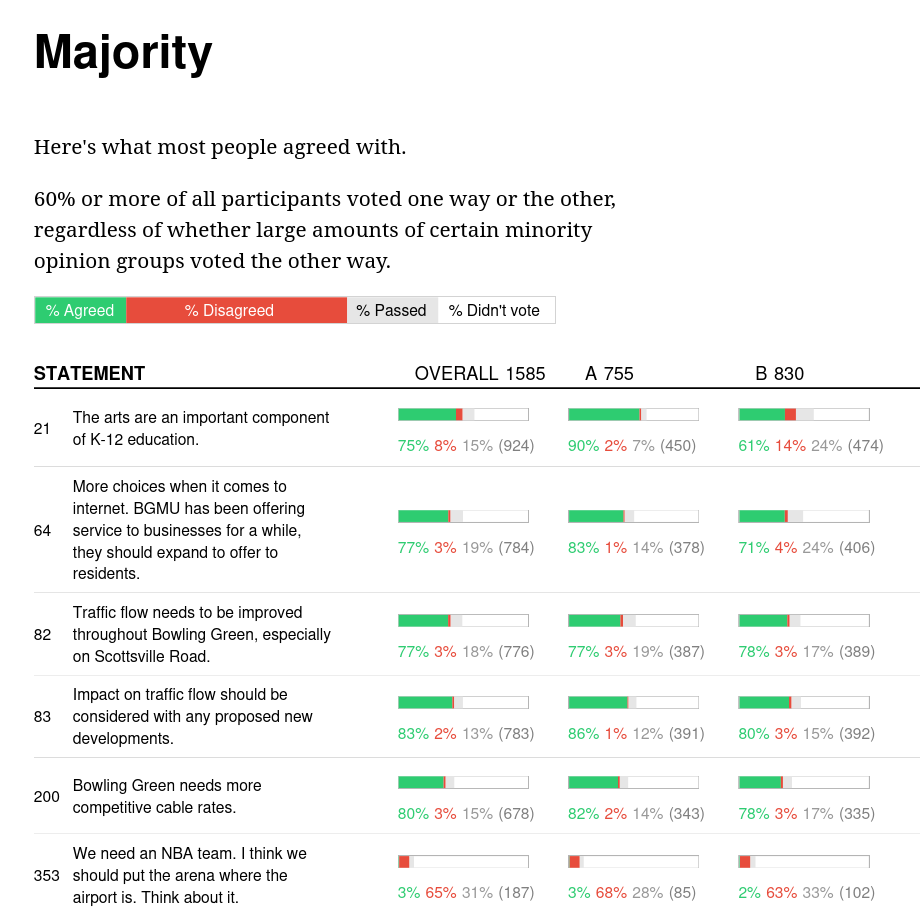

Polis

- “Real-time system for gathering, analyzing and understanding” public opinion (Small 2021)

- Developed as an open-source platform for public discourse

- Several published case studies

- Participants post short messages and vote on others

- Polis algorithm ensures exposure to diverse opinions

- \(\text{comments} \times \text{votes} \rightarrow\) opinion matrix (one row per participant, one column per comment)

  - Fed into statistical models

  - Reveals where people agree or disagree
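Polis is commonly described as reducing the opinion matrix with PCA and then grouping participants with k-means. The NumPy sketch below imitates that pipeline under simplifying assumptions (votes encoded as +1 agree, -1 disagree, 0 pass or unseen; deterministic farthest-point initialization; no special handling of participants with few votes). The function names and example votes are hypothetical, and this is not the Polis implementation:

```python
import numpy as np


def opinion_matrix(votes, n_participants, n_comments):
    """Participants x comments matrix from (participant, comment, vote) triples.

    Assumed encoding: +1 agree, -1 disagree, 0 pass or not seen.
    """
    m = np.zeros((n_participants, n_comments))
    for pid, cid, vote in votes:
        m[pid, cid] = vote
    return m


def project_2d(m):
    """PCA via SVD: center each comment column and keep two principal components."""
    centered = m - m.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :2] * s[:2]


def kmeans(points, k, n_iter=50):
    """Lloyd's k-means with deterministic farthest-point initialization."""
    centers = [points[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[dist.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels


# Hypothetical votes: participants 0-1 and 2-3 form two opposing camps.
votes = [(0, 0, 1), (0, 1, 1), (0, 2, -1), (1, 0, 1), (1, 1, 1), (1, 2, -1),
         (2, 0, -1), (2, 1, -1), (2, 2, 1), (3, 0, -1), (3, 1, -1), (3, 2, 1)]
m = opinion_matrix(votes, n_participants=4, n_comments=3)
groups = kmeans(project_2d(m), k=2)
print(groups)  # [0 0 1 1]
```

Projecting to two dimensions before clustering keeps the groups easy to plot, which is how Polis-style opinion maps are usually visualized.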

D-Agree Crowd-Scale Discussion

- Automated agent to facilitate online discussion (Ito, Hadfi, and Suzuki 2022)

- IBIS-based discussion representation

- Extracts and analyzes discussion structures from online discussions

- Posts facilitation messages to incentivize participants and grow the IBIS tree

- Best results when agent augmented human facilitators (Hadfi and Ito 2022)

- Results

  - Use of the agent produced more ideas for any given issue

  - The agent received 1.4 times as many replies, with response intervals about one-sixth as long

  - Increased user satisfaction and sense of accomplishment

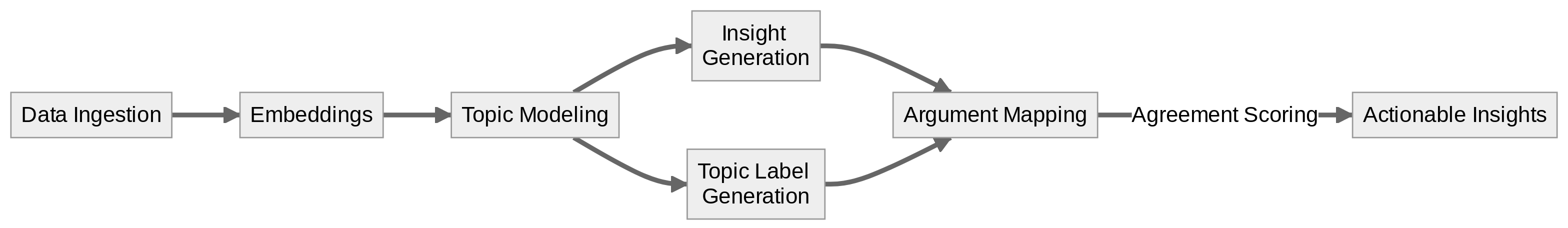

Methodology

Data

- Summary Statistics: conversation topic, number of participants, total comments, total votes

- Comments: author, comment text, moderated, agree votes, disagree votes

- Votes: voter ID, comment ID, timestamp, vote

- Participant-Vote Matrix: participant ID, group ID, n-votes, n-agree, n-disagree, comment ID…

- Stats History: votes, comments, visitors, voters, commenters

| Dataset | Participants | Comments | Accepted comments |
|---|---|---|---|
| american-assembly.bowling-green | 2031 | 896 | 607 |
| scoop-hivemind.biodiversity | 536 | 314 | 154 |
| scoop-hivemind.taxes | 334 | 148 | 91 |
| scoop-hivemind.affordable-housing | 381 | 165 | 119 |
| scoop-hivemind.freshwater | 117 | 80 | 51 |
| scoop-hivemind.ubi | 234 | 78 | 71 |
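Counts like those in the table can be derived from the comment and vote exports described above. A minimal sketch using Python's `csv` module on a tiny inline sample; the column names, the moderation encoding (1 accepted, -1 rejected, 0 unmoderated), and the rule that anyone who commented or voted counts as a participant are assumptions, not necessarily the exact Polis export schema:

```python
import csv
import io

# Hypothetical miniature exports; column names and encodings are assumptions.
COMMENTS_CSV = """comment-id,author-id,moderated,agrees,disagrees,comment-body
0,10,1,5,1,More bike lanes downtown
1,11,-1,0,4,Off-topic advertisement
2,10,0,2,2,Extend library hours
"""
VOTES_CSV = """voter-id,comment-id,vote
10,0,1
11,0,1
12,0,-1
12,2,1
"""


def summarize(comments_csv: str, votes_csv: str) -> dict:
    comments = list(csv.DictReader(io.StringIO(comments_csv)))
    votes = list(csv.DictReader(io.StringIO(votes_csv)))
    # Assumed definition: a participant is anyone who commented or voted.
    participants = {c["author-id"] for c in comments} | {v["voter-id"] for v in votes}
    accepted = sum(1 for c in comments if int(c["moderated"]) == 1)
    return {
        "participants": len(participants),
        "comments": len(comments),
        "accepted": accepted,
        "votes": len(votes),
    }


print(summarize(COMMENTS_CSV, VOTES_CSV))
```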

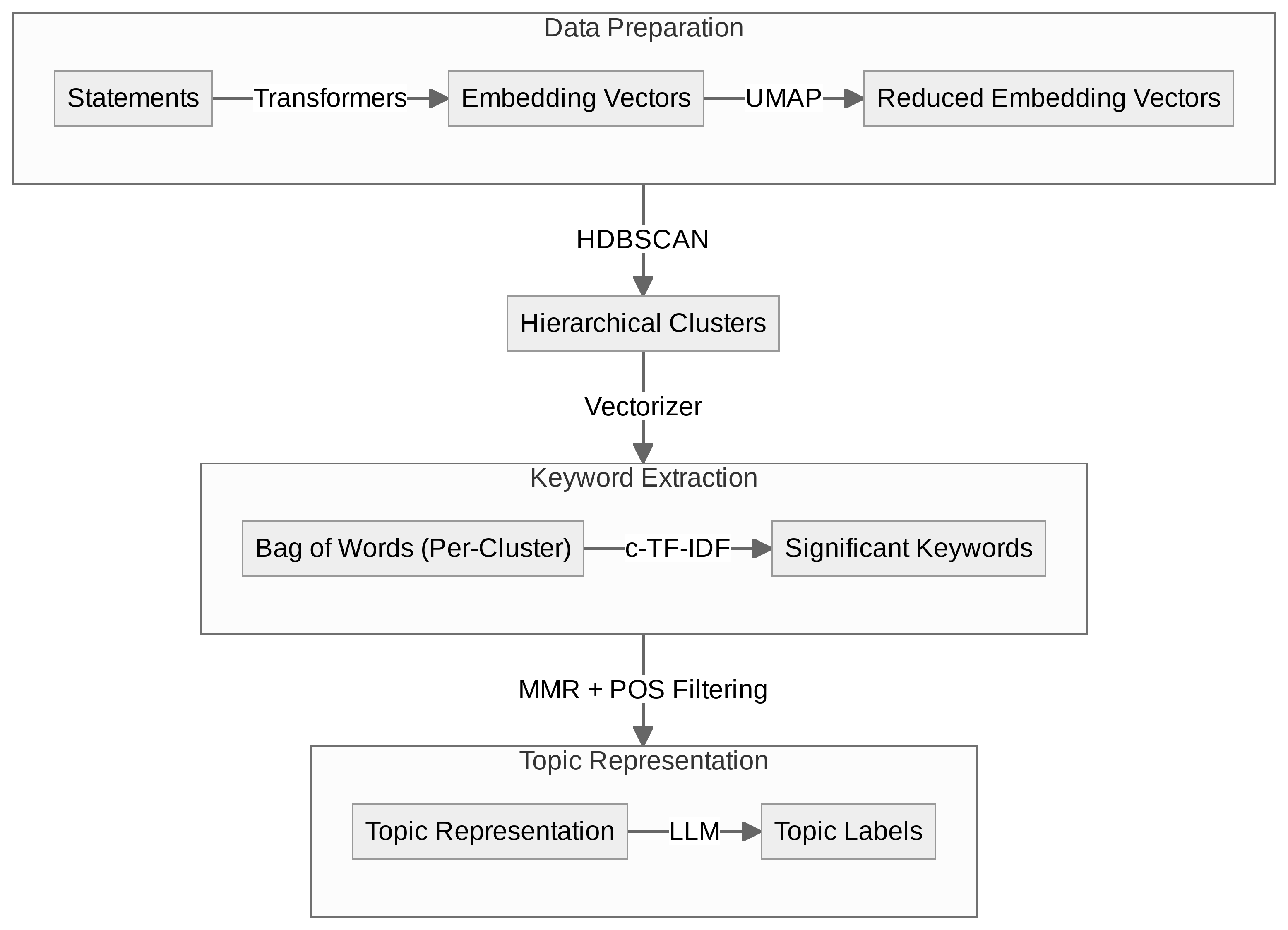

Embeddings

- Computed for each comment using the Sentence Transformers library

- Models considered

  - intfloat/e5-mistral-7b-instruct

  - WhereIsAI/UAE-Large-V1

  - OpenAI/text-embedding-ada-002

  - OpenAI/text-embedding-3-large

- Model selection criteria

  - Open weights

  - Clustering performance on the Hugging Face MTEB leaderboard

  - Memory footprint
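Computing comment embeddings with Sentence Transformers takes only a few lines. The sketch below assumes `WhereIsAI/UAE-Large-V1` as the default (one of the open-weight candidates listed; which model was finally chosen is not stated here) and accepts any object with an `encode` method, so it can be exercised without downloading weights. The helper names are hypothetical. The OpenAI models are served through the OpenAI API rather than as downloadable weights, so they would need a different client:

```python
import numpy as np


def embed_comments(comments, model=None, model_name="WhereIsAI/UAE-Large-V1"):
    """Return an (n_comments, dim) array of unit-length comment embeddings.

    `model` can be any object with a Sentence Transformers-style `encode`
    method; if omitted, `model_name` is loaded from the Hugging Face Hub
    (needs the sentence-transformers package and network access).
    """
    if model is None:
        from sentence_transformers import SentenceTransformer
        model = SentenceTransformer(model_name)
    vectors = model.encode(
        comments,
        batch_size=32,
        normalize_embeddings=True,  # unit vectors: dot product equals cosine similarity
        show_progress_bar=False,
    )
    return np.asarray(vectors, dtype=float)


def cosine_similarity_matrix(vectors):
    """Pairwise cosine similarity, assuming rows are already unit-normalized."""
    return vectors @ vectors.T


# Usage (downloads the model on first run):
# emb = embed_comments(["More bus routes", "Lower transit fares"])
# sim = cosine_similarity_matrix(emb)
```

Normalizing at encode time means later clustering and similarity steps can use plain dot products.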

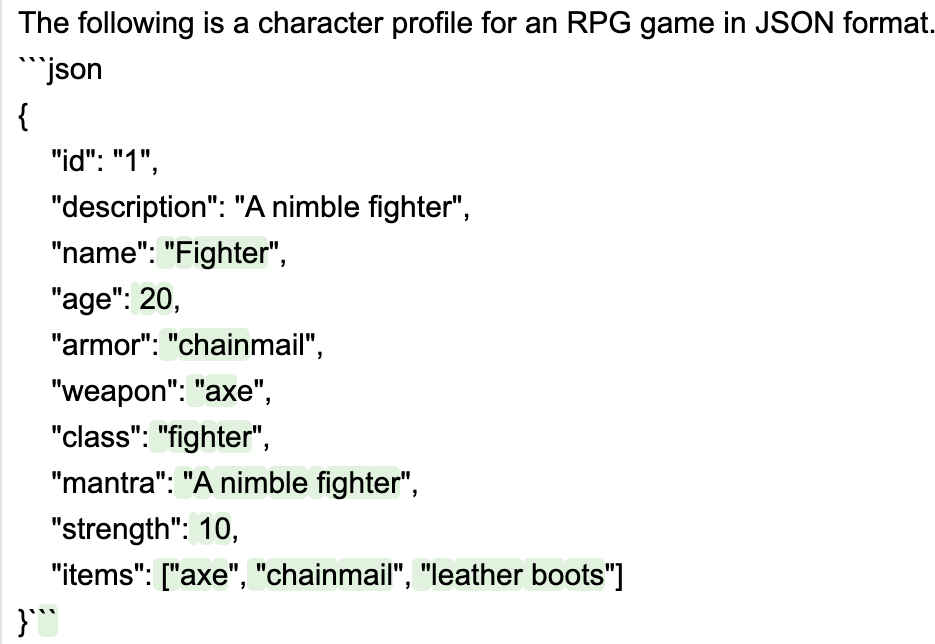

Text Generation

- Models Considered

- Guidance

- Python-based framework developed by Microsoft Research

- Constrain generation using regular expressions and context-free grammars

- Interleave control and generation seamlessly

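````python
import guidance
from guidance import gen, models, select


@guidance
def character_maker(lm, char_id, description, valid_weapons):
    # gen() with stop/regex and select() with fixed options constrain each JSON field.
    lm += f"""\
The following is a character profile for an RPG game in JSON format.
```json
{{
    "id": "{char_id}",
    "description": "{description}",
    "name": "{gen('name', stop='"')}",
    "age": {gen('age', regex='[0-9]+', stop=',')},
    "armor": "{select(options=['leather', 'chainmail', 'plate'], name='armor')}",
    "weapon": "{select(options=valid_weapons, name='weapon')}",
    "class": "{gen('class', stop='"')}",
    "mantra": "{gen('mantra', stop='"')}",
    "strength": {gen('strength', regex='[0-9]+', stop=',')},
    "items": ["{gen('item', list_append=True, stop='"')}", "{gen('item', list_append=True, stop='"')}", "{gen('item', list_append=True, stop='"')}"]
}}```"""
    return lm


# Assumed backend: any Guidance-supported model; "gpt2" is only a small placeholder.
lm = models.Transformers("gpt2")
lm += character_maker(1, "A nimble fighter", ["axe", "sword", "bow"])
print(lm["name"], lm["armor"], lm["weapon"], lm["item"])
````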

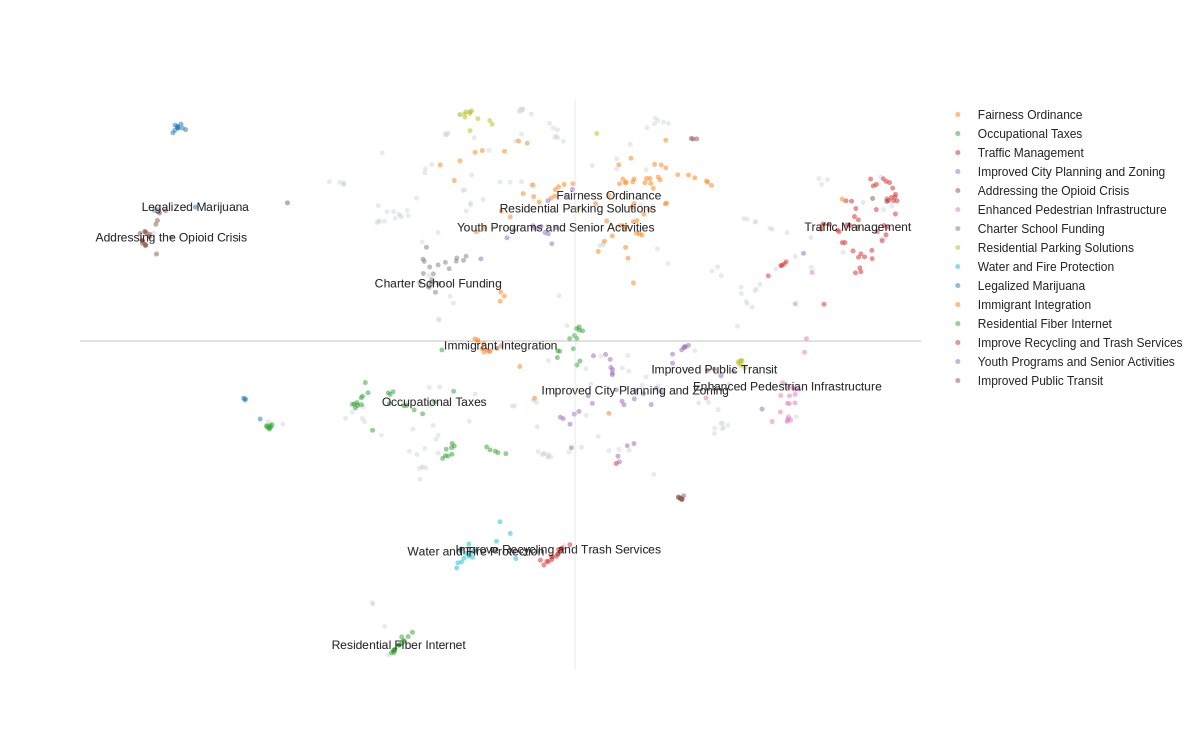

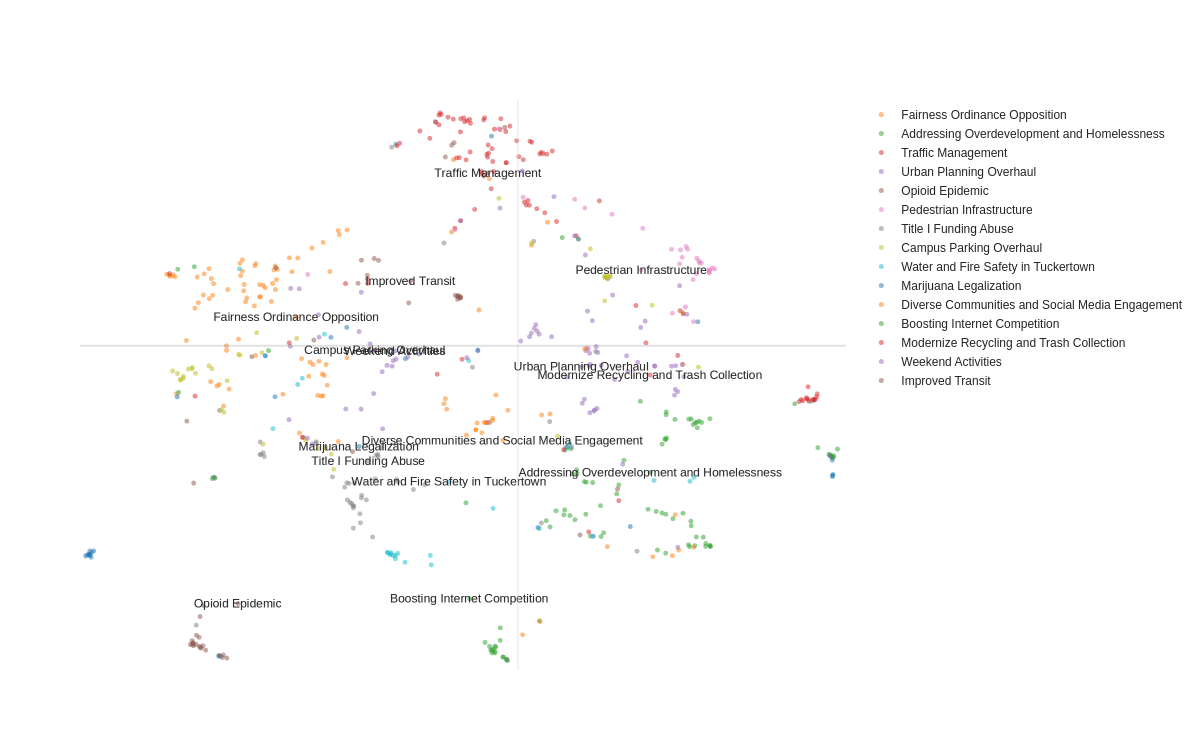

Topic Modeling

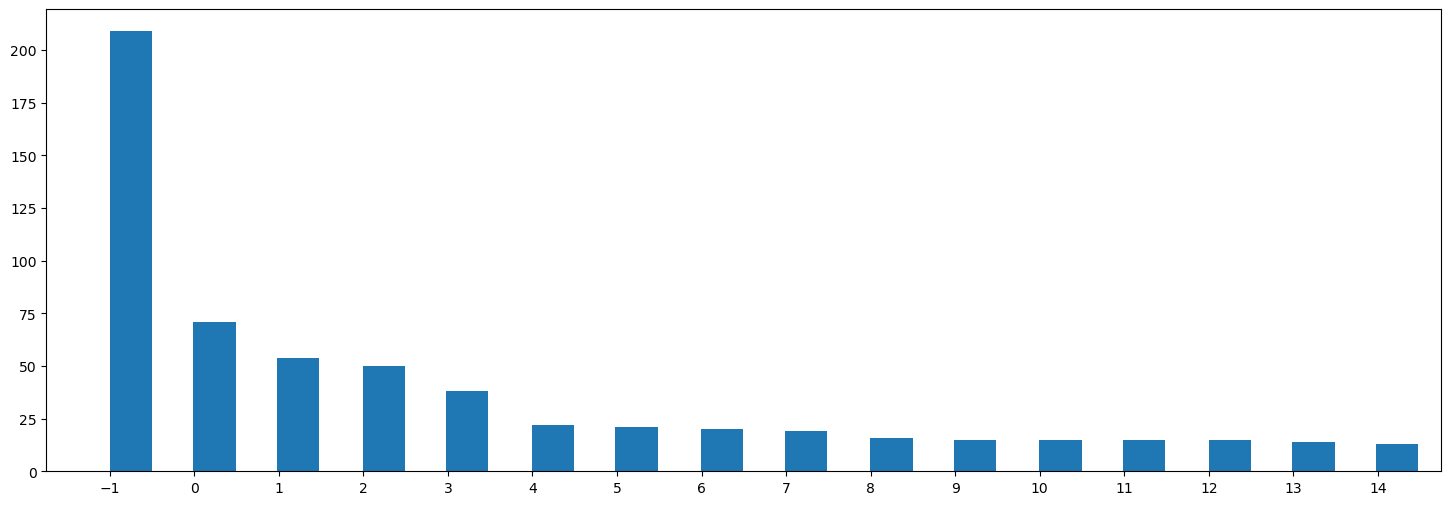
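The topic model appears only as a figure here, but the reference list cites BERTopic (Grootendorst 2022), which describes topics with a class-based TF-IDF (c-TF-IDF): all comments in a topic are treated as one document, and term \(t\) in topic \(c\) is weighted \(W_{t,c} = \mathrm{tf}_{t,c} \cdot \log(1 + A / f_t)\), where \(\mathrm{tf}_{t,c}\) is the term's normalized frequency in the topic, \(f_t\) its frequency across all topics, and \(A\) the average number of words per topic. The pure-Python sketch below, assuming BERTopic-style topics are what the figure shows, illustrates only that weighting; it omits stop-word removal and the clustering step, the function name is hypothetical, and it is not BERTopic's code:

```python
import math
import re
from collections import Counter, defaultdict


def c_tf_idf_keywords(docs, topics, top_n=3):
    """Top keywords per topic using class-based TF-IDF weighting.

    W[t, c] = tf[t, c] * log(1 + A / f[t]), where tf is the L1-normalized term
    frequency within topic c, f[t] is the term's frequency over all topics,
    and A is the average number of words per topic.
    """
    # Treat all documents of a topic as one concatenated "class document".
    class_counts = defaultdict(Counter)
    for doc, topic in zip(docs, topics):
        class_counts[topic].update(re.findall(r"[a-z]+", doc.lower()))

    total = Counter()
    for counts in class_counts.values():
        total.update(counts)
    avg_words = sum(total.values()) / len(class_counts)

    keywords = {}
    for topic, counts in class_counts.items():
        n_words = sum(counts.values())
        scores = {
            term: (count / n_words) * math.log(1 + avg_words / total[term])
            for term, count in counts.items()
        }
        # Highest weight first; ties broken alphabetically for determinism.
        ranked = sorted(scores, key=lambda t: (-scores[t], t))
        keywords[topic] = ranked[:top_n]
    return keywords


docs = ["bike lanes downtown", "safer bike lanes", "rent is too high", "high rent hurts families"]
print(c_tf_idf_keywords(docs, [0, 0, 1, 1]))
# {0: ['bike', 'lanes', 'downtown'], 1: ['high', 'rent', 'families']}
```

The `log(1 + A / f_t)` factor down-weights words that are frequent across every topic, so each topic's keywords are the ones that distinguish it.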

Topic Outlier Assignment

Topic -1 is reserved for outlier comments that do not initially belong to any cluster.
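Outlier comments (topic -1) can be folded back into existing topics before insights are generated. BERTopic offers a `reduce_outliers` method for this with several strategies; the NumPy sketch below hand-rolls an embedding-based variant, assuming each comment already has an embedding. Every outlier moves to the topic whose mean embedding is most cosine-similar, unless that similarity falls below `threshold`. The function name and `threshold` parameter are hypothetical, and this is not necessarily the reassignment used for the figures:

```python
import numpy as np


def reassign_outliers(embeddings, topics, threshold=0.0):
    """Move topic -1 comments to the topic with the most similar centroid.

    Outliers whose best cosine similarity is below `threshold` stay at -1.
    """
    topics = np.asarray(topics).copy()
    topic_ids = sorted(set(topics.tolist()) - {-1})
    if not topic_ids:  # nothing to reassign to
        return topics
    emb = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    centroids = np.stack([emb[topics == t].mean(axis=0) for t in topic_ids])
    centroids /= np.linalg.norm(centroids, axis=1, keepdims=True)

    outliers = np.where(topics == -1)[0]
    if len(outliers):
        sims = emb[outliers] @ centroids.T  # cosine similarity to each centroid
        best = sims.argmax(axis=1)
        keep = sims[np.arange(len(outliers)), best] >= threshold
        topics[outliers[keep]] = np.array(topic_ids)[best[keep]]
    return topics


# Hypothetical 2-D embeddings: the last two comments start as outliers.
emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.8, 0.2], [-1.0, -1.0]])
print(reassign_outliers(emb, [0, 0, 1, 1, -1, -1]).tolist())  # [0, 0, 1, 1, 0, -1]
```

The threshold trades coverage for coherence: a negative value reassigns every outlier, while a higher value leaves dissimilar comments out of all topics.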

Statement Distribution

Statement Distribution after outlier reassignment

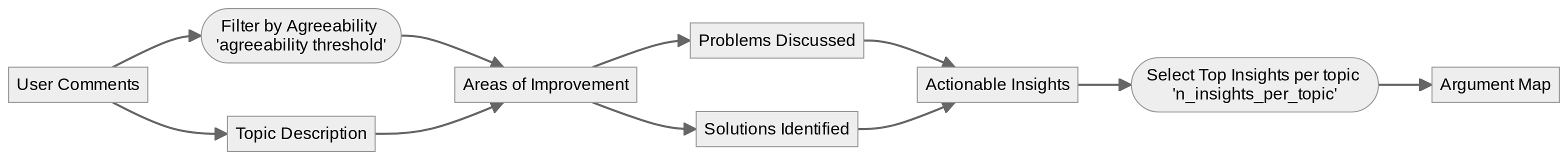

Insight Generation

- Actionable insights: statements that urge specific actions to address issues

- Problems and solutions proposed by participants

- LLM synthesizes insights from comments within each topic

- Prompt favors specific insights that urge action on the issues raised

- Filter these insights to derive actionable items
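The insight-generation prompt itself is not shown. Purely as an illustration, per-topic prompts could be assembled as below; the template wording, `build_insight_prompts`, and the `max_comments` cap are hypothetical, and the resulting strings would be sent to whichever LLM is used:

```python
from collections import defaultdict

# Hypothetical prompt wording; not the prompt used in this work.
PROMPT_TEMPLATE = """The following comments were posted in a public discussion about "{topic}".

{comments}

Based only on these comments, list {n} actionable insights. Each insight must
urge a specific action that addresses a problem raised by participants."""


def build_insight_prompts(comments, topics, topic_labels, n_insights=3, max_comments=50):
    """Return one prompt per topic, listing that topic's comments as bullets."""
    by_topic = defaultdict(list)
    for text, topic in zip(comments, topics):
        if topic != -1:  # skip comments still marked as outliers
            by_topic[topic].append(text)

    prompts = {}
    for topic, texts in sorted(by_topic.items()):
        bullet_list = "\n".join(f"- {t}" for t in texts[:max_comments])
        prompts[topic] = PROMPT_TEMPLATE.format(
            topic=topic_labels[topic], comments=bullet_list, n=n_insights
        )
    return prompts


prompts = build_insight_prompts(
    ["Bus service ends too early", "Add night buses", "Great meeting!"],
    [0, 0, -1],
    {0: "public transit"},
)
print(prompts[0])
```

Asking for the same `n_insights` per topic is one simple way to keep popular topics from dominating the output.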

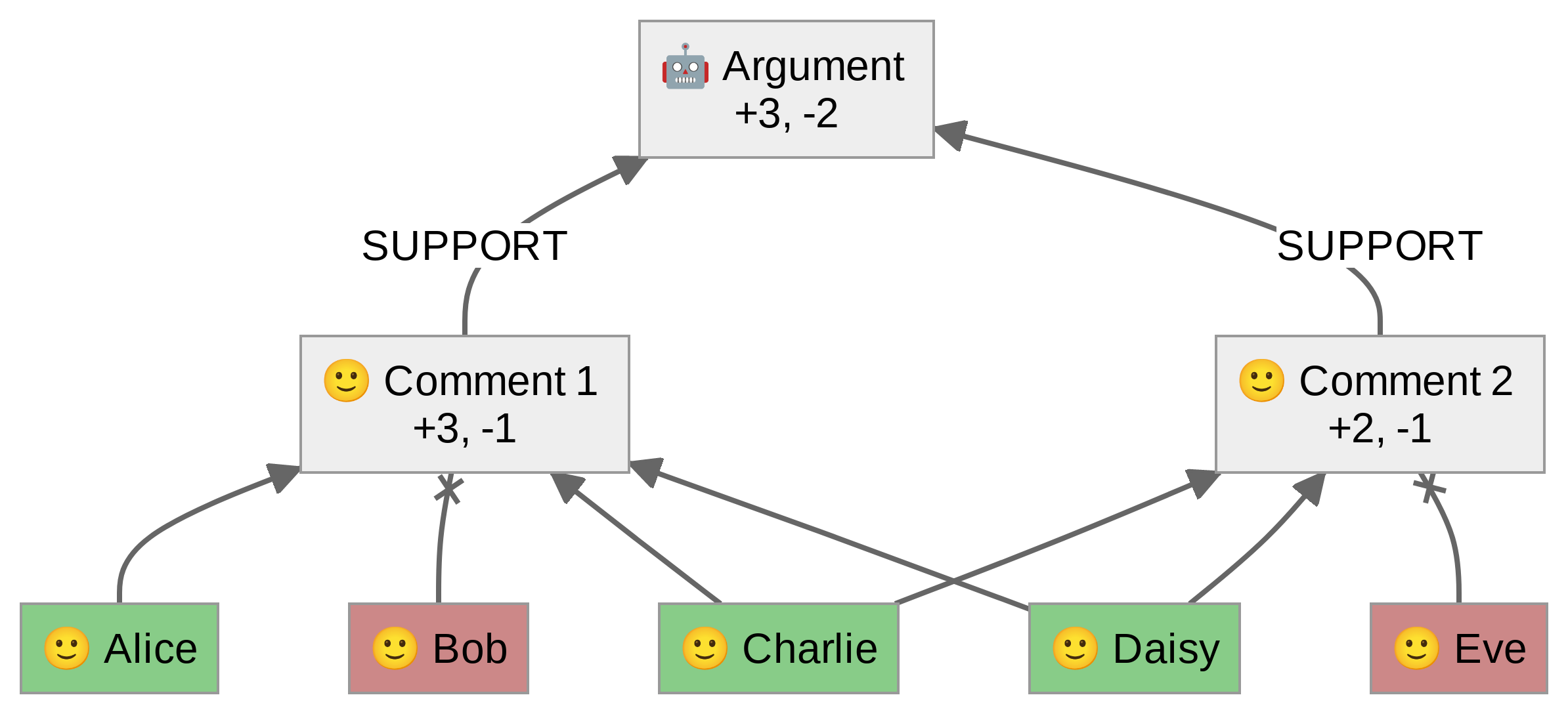

Insight Scoring

- Goal: Quantify acceptance of each generated insight

- Task: Identify comments that support each insight

- Count the individuals that voted positively on supporting comments

- Calculate an “acceptance” factor to indicate the degree of consensus
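The exact acceptance formula is not given. One plausible reading, sketched below as a hypothetical: given which comments support an insight (for example, as judged by an LLM), count the distinct voters who agreed with any supporting comment and divide by the distinct voters who voted on those comments at all. The function name, input shapes, and vote encoding (+1 agree, -1 disagree, 0 pass) are assumptions:

```python
def acceptance(insight_support, votes):
    """Score each insight by the share of engaged voters who agreed with its support.

    insight_support: {insight_id: set of supporting comment ids}
    votes: iterable of (voter_id, comment_id, vote), +1 agree, -1 disagree, 0 pass
    Returns {insight_id: (n_supporters, acceptance)} where acceptance =
    distinct agreeing voters / distinct voters on the supporting comments.
    """
    votes = list(votes)
    scores = {}
    for insight, comment_ids in insight_support.items():
        voters = {v for v, c, _ in votes if c in comment_ids}
        # Each person counts once, however many supporting comments they agreed with.
        supporters = {v for v, c, vote in votes if c in comment_ids and vote > 0}
        ratio = len(supporters) / len(voters) if voters else 0.0
        scores[insight] = (len(supporters), ratio)
    return scores


# Hypothetical data: insight "A" is supported by comments 1 and 2, "B" by comment 3.
support = {"A": {1, 2}, "B": {3}}
votes = [("u1", 1, 1), ("u1", 2, 1), ("u2", 1, -1), ("u3", 2, 1), ("u2", 3, 1), ("u3", 3, -1)]
print(acceptance(support, votes))
```

Voter `u1` agrees with both of insight A's supporting comments but is counted once, which is the "count individuals" idea from the slide.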

Potential Biases in Insight Generation

- Some comments, especially those posted earlier, may receive more votes than others

  - Use the ratio of agreement votes to total votes

- Certain topics are more popular and have more comments than others

  - Generate a balanced number of insights for each topic

- Certain controversial topics are heavily downvoted

  - Comments: filter by quantiles within each topic instead of fixed thresholds

  - Insights: select a fixed number of “best insights” from each topic

- Some people vote more than others

  - Count the individuals who support an insight rather than the raw vote count
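Several of these mitigations are simple to express in code. A hypothetical sketch, assuming comments are dicts with `topic`, `agrees`, and `disagrees` keys and insights arrive as `(topic, text, score)` tuples; the function names, the 0.5 quantile, and `k=2` are illustration choices only:

```python
from collections import defaultdict


def agree_ratio(agrees, disagrees):
    """Share of agree votes, so comments with many votes are not favored."""
    total = agrees + disagrees
    return agrees / total if total else 0.0


def quantile(values, q):
    """Linear-interpolation quantile of a non-empty list (0 <= q <= 1)."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def filter_by_topic_quantile(comments, q=0.5):
    """Keep comments at or above their own topic's q-quantile of agree ratio.

    A per-topic cut-off keeps heavily downvoted, controversial topics represented.
    """
    ratios = defaultdict(list)
    for c in comments:
        ratios[c["topic"]].append(agree_ratio(c["agrees"], c["disagrees"]))
    cutoff = {topic: quantile(rs, q) for topic, rs in ratios.items()}
    return [c for c in comments
            if agree_ratio(c["agrees"], c["disagrees"]) >= cutoff[c["topic"]]]


def top_k_per_topic(insights, k=2):
    """Keep the k highest-scoring insights in each topic."""
    by_topic = defaultdict(list)
    for topic, text, score in insights:
        by_topic[topic].append((score, text))
    return {topic: [text for _, text in sorted(items, key=lambda x: -x[0])[:k]]
            for topic, items in by_topic.items()}


comments = [
    {"topic": 0, "agrees": 9, "disagrees": 1},
    {"topic": 0, "agrees": 5, "disagrees": 5},
    {"topic": 0, "agrees": 1, "disagrees": 9},
    {"topic": 1, "agrees": 2, "disagrees": 8},  # controversial topic: all ratios low
    {"topic": 1, "agrees": 1, "disagrees": 9},
]
print([c["agrees"] for c in filter_by_topic_quantile(comments)])  # [9, 5, 2]
```

With a fixed global threshold such as 0.5, topic 1 would lose every comment; the per-topic quantile keeps its best-received comment.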

Argument Mapping

- Used Argdown syntax to generate argument maps

- Developed a grammar generator to convert data into Argdown format

- Generated argument maps for each topic to visualize the structure of the debate
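The grammar generator itself is not shown. A minimal string-based emitter, sketched under the assumption that each insight carries supporting and opposing comments, could produce Argdown of the kind in the syntax example that follows: in Argdown, a `+` line indented under a statement marks a supporting argument and a `-` line an attacking one. The function name, dictionary keys, and argument-title scheme are hypothetical, and special characters in comment text are not escaped:

```python
def to_argdown(topic, insights):
    """Render one topic as Argdown text.

    insights: dicts with 'title', 'text', and optional 'pros' / 'cons'
    (lists of comment strings). Each insight becomes a statement; comments
    become supporting (+) or attacking (-) arguments indented beneath it.
    """
    lines = [f"# {topic}", ""]
    for ins in insights:
        lines.append(f"[{ins['title']}]: {ins['text']}")
        for i, pro in enumerate(ins.get("pros", []), 1):
            lines.append(f"  + <{ins['title']} pro {i}>: {pro}")
        for i, con in enumerate(ins.get("cons", []), 1):
            lines.append(f"  - <{ins['title']} con {i}>: {con}")
        lines.append("")
    return "\n".join(lines)


print(to_argdown("Public Transit", [{
    "title": "Night Buses",
    "text": "Run buses after midnight.",
    "pros": ["Shift workers need rides home."],
    "cons": ["Costly for low ridership."],
}]))
```

Emitting plain text keeps the generator independent of any Argdown tooling; the output can then be rendered into a map with the Argdown CLI or web component.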


```argdown
# Argdown Syntax Example

[Statement]: Argdown is a simple syntax for defining argumentative structures, inspired by Markdown.
  + Writing a list of **pros & cons** in Argdown is as simple as writing a twitter message.
  + But you can also **logically reconstruct** more complex relations.
  + You can export Argdown as a graph and create **argument maps** of whole debates.
  - Not a tool for creating visualizations, but for **structuring arguments**.

<Argument>: Argdown is an excellent tool and should be used by the city of Bowling Green, KY.

[Statement]
  +> <Argument>
```

Argument Generation and Scoring

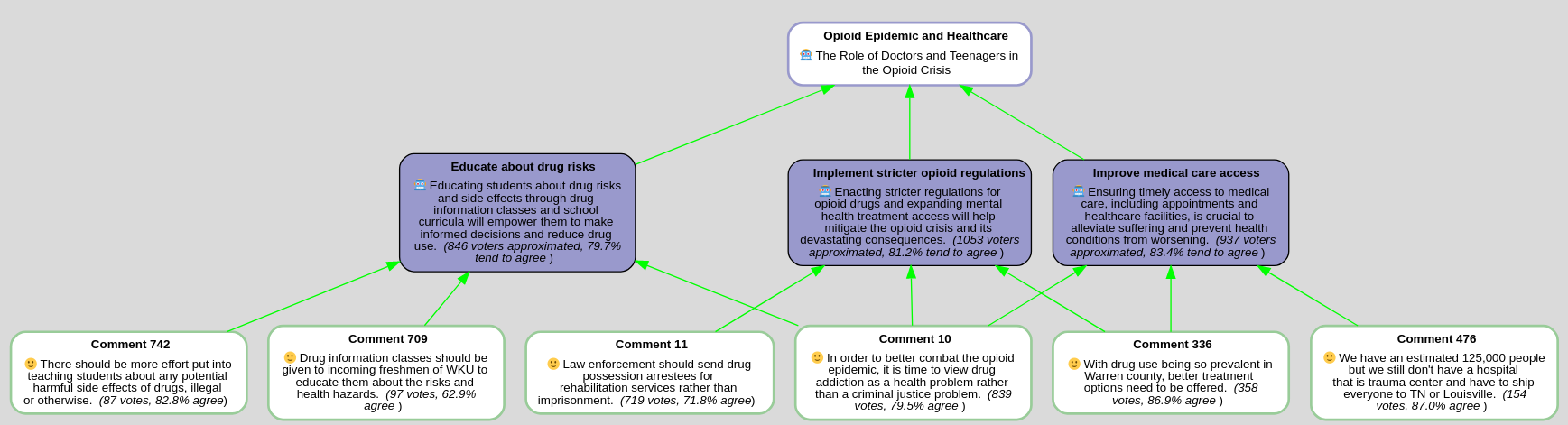

Opioid Epidemic and Healthcare

Argument Generation and Scoring

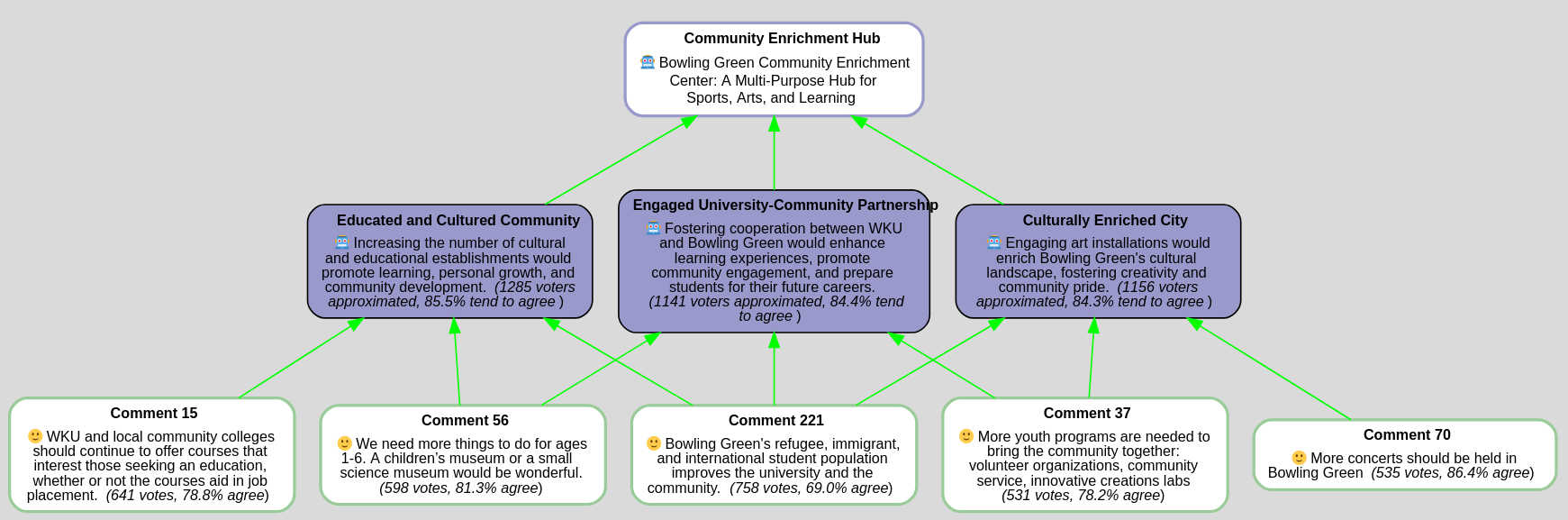

Community Enrichment

Conclusion

- Chaining simple tasks for complex reasoning

- Discovering topics in a large dataset and generating new, valuable insights

- Risk of hallucinations and incorrect output

- LLMs’ limitations in processing complex instructions and sentences

  - Complex instructions

  - Relationship modeling based on double and triple negatives

- Reliability and bias

- Critical need for ethical and inclusive technology deployment

Future Research Directions

- Semantic extraction and reasoning during discourse

- Exploring connections across topics

- Generalizing the techniques to other platforms, such as Kialo and Hacker News

Questions?

Thank you!

Conklin, E. Jeffrey. 2006. Dialogue Mapping: Building Shared Understanding of Wicked Problems. Chichester, England; Hoboken, NJ: Wiley.

Conklin, Jeff, and Michael L. Begeman. 1988. “gIBIS: A Hypertext Tool for Exploratory Policy Discussion.” ACM Transactions on Information Systems 6 (4): 303–31. https://doi.org/10.1145/58566.59297.

Grootendorst, Maarten. 2022. “BERTopic: Neural Topic Modeling with a Class-Based TF-IDF Procedure.” arXiv. http://arxiv.org/abs/2203.05794.

Hadfi, Rafik, and Takayuki Ito. 2022. “Augmented Democratic Deliberation: Can Conversational Agents Boost Deliberation in Social Media?” In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, 1794–98. AAMAS ’22. Richland, SC: International Foundation for Autonomous Agents and Multiagent Systems.

Ito, Takayuki, Rafik Hadfi, and Shota Suzuki. 2022. “An Agent That Facilitates Crowd Discussion.” Group Decision and Negotiation 31 (3): 621–47. https://doi.org/10.1007/s10726-021-09765-8.

Small, Christopher. 2021. “Polis: Scaling Deliberation by Mapping High Dimensional Opinion Spaces.” RECERCA. Revista de Pensament i Anàlisi, July. https://doi.org/10.6035/recerca.5516.