Moderating Democratic Discourse with Large Language Models

Aaditya (Sonny) Bhatia

United States Military Academy, West Point, NY

Advisor: Dr. Gita Sukthankar

University of Central Florida, Orlando, FL

Wednesday, September 18, 2024

Introduction

- Democracy ➜ policy decisions ➜ discourse

- Policy decisions are wicked problems that require discourse!

- A solution's effectiveness is known only after attempting it, and there is only a single attempt

- Measuring impact shifts the problem itself

- Wisdom of the crowd helps generate and evaluate solutions!

- Online deliberation works well in small groups

- Does not scale easily

- Requires human facilitation

Problem Statement

- Silos are comfortable ➜ echo chamber effect

- Lack of diversity ➜ polarization ➜ misinformation

- Misinformation shapes opinion before correction

- Facilitation complexity does not scale linearly

How can we use LLMs to improve comment moderation and enable public deliberation at scale?

Background

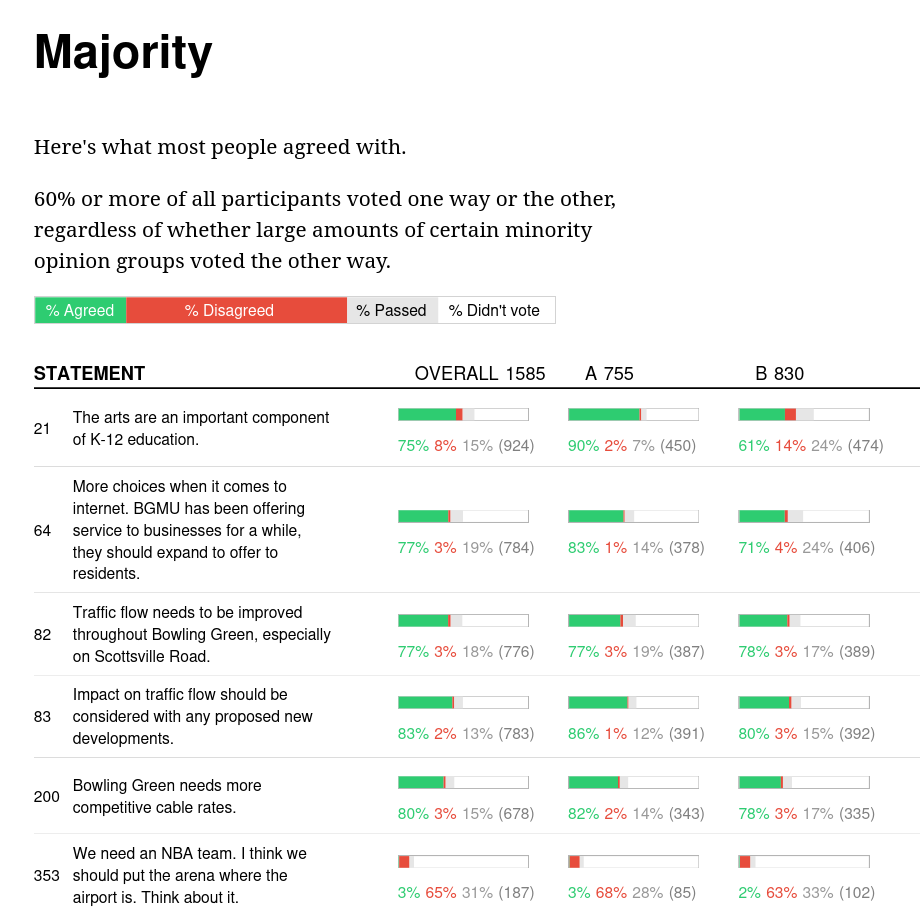

Polis

- “Real-time system for gathering, analyzing and understanding” public opinion

- Developed as an open-source platform for public discourse

- Published several case studies

- Participants post short messages and vote on others

- Polis algorithm ensures exposure to diverse opinions

- \(\text{comments} \times \text{votes} = \text{opinion matrix}\)

- fed into statistical models

- understand where people agree or disagree
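The opinion matrix can be pictured with a minimal sketch. It assumes votes arrive as (voter ID, comment ID, vote) records with +1 for agree, -1 for disagree, and 0 for pass, and that rows are participants and columns are comments. The encoding and the function names are illustrative assumptions, not the Polis implementation.

```python
def build_opinion_matrix(votes):
    """Build a participants x comments matrix; None marks a comment not voted on."""
    participants = sorted({voter for voter, _, _ in votes})
    comments = sorted({comment for _, comment, _ in votes})
    row = {p: i for i, p in enumerate(participants)}
    col = {c: j for j, c in enumerate(comments)}
    matrix = [[None] * len(comments) for _ in participants]
    for voter, comment, vote in votes:
        matrix[row[voter]][col[comment]] = vote
    return participants, comments, matrix


def agreement_rate(matrix, j):
    """Share of cast votes on comment column j that are 'agree' (+1)."""
    cast = [r[j] for r in matrix if r[j] is not None]
    if not cast:
        return None
    return sum(1 for v in cast if v == 1) / len(cast)


# Hypothetical vote records, invented for illustration only.
votes = [
    ("p1", "c1", 1), ("p1", "c2", -1),
    ("p2", "c1", 1),
    ("p3", "c1", -1), ("p3", "c2", -1),
]
participants, comments, matrix = build_opinion_matrix(votes)
```

A matrix like this is the kind of input that statistical models such as dimensionality reduction or clustering could consume to find where groups agree or disagree.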

Human Moderation in Polis

- Near-real-time feedback (agree, disagree, neutral)

- Loosely follow Polis moderation guidelines

  - Spam: devoid of relevance to the discussion.

  - Duplicative: restating a previously made point.

  - Complex: articulating multiple ideas or problems.

- Each organization has its own methodology

- Process is time-consuming and inconsistent

Large Language Models

- Extremely good at pattern recognition and summarization

- Generate appealing content

- Reasoning for small tasks

- Use for simple decision-making

Research Questions

- How effective are LLMs for content moderation on Polis?

- How do various prompting strategies perform against human moderation?

- Can LLMs effectively augment or entirely replace human moderators?

Methodology

Data

- Summary Statistics: conversation topic, number of participants, total comments, total votes

- Comments: author, comment text, moderated, agree votes, disagree votes

- Votes: voter ID, comment ID, timestamp, vote

- Participant-Vote Matrix: participant ID, group ID, n-votes, n-agree, n-disagree, comment ID…

- Stats History: votes, comments, visitors, voters, commenters

| Dataset | Participants | Comments | Accepted |
|---|---|---|---|
| american-assembly.bowling-green | 2031 | 896 | 607 |
| scoop-hivemind.biodiversity | 536 | 314 | 154 |
| scoop-hivemind.taxes | 334 | 148 | 91 |
| scoop-hivemind.affordable-housing | 381 | 165 | 119 |
| scoop-hivemind.freshwater | 117 | 80 | 51 |
| scoop-hivemind.ubi | 234 | 78 | 71 |
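The share of comments accepted differs noticeably between datasets, which matters when comparing a model against human moderation. The sketch below computes that share from the table, assuming the "Accepted" column counts comments accepted by human moderators; the variable names are illustrative.

```python
# (total comments, accepted comments) copied from the dataset table.
datasets = {
    "american-assembly.bowling-green": (896, 607),
    "scoop-hivemind.biodiversity": (314, 154),
    "scoop-hivemind.taxes": (148, 91),
    "scoop-hivemind.affordable-housing": (165, 119),
    "scoop-hivemind.freshwater": (80, 51),
    "scoop-hivemind.ubi": (78, 71),
}

# Acceptance rate per dataset, as a percentage.
rates = {
    name: 100 * accepted / total
    for name, (total, accepted) in datasets.items()
}

for name, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{name:40s} {rate:5.1f}%")
```

Under this reading, roughly half of the biodiversity comments were accepted, compared with about nine in ten for the UBI conversation, so a single accuracy figure can hide very different class balances.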

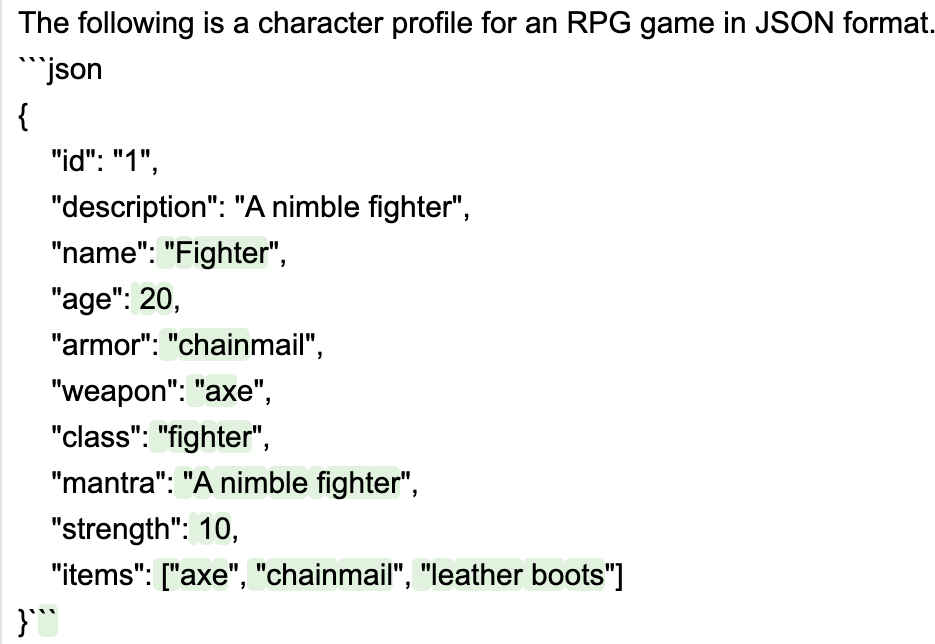

Text Generation

- Guidance

- Python framework from Microsoft Research

- Precisely control generation

- Interleave control and generation seamlessly

- Models Considered

Experimental Configurations

| Config | Target Classes | Examples | Deconstruction | CoT Technique |
|---|---|---|---|---|
| 1 | 3 | No | No | N/A |
| 2 | 3 | Yes | No | N/A |
| 3 | 3 | No | No | Thought after rejection |
| 4 | 3 | Yes | No | Thought after rejection |
| 5 | 7 | No | No | N/A |
| 6 | 7 | No | No | Thought before decision |
| 7 | 7 | No | Yes | Thought before decision |
| 8 | 7 | No | Yes | N/A |
| 9 | 3 | No | Yes | Thought before decision |

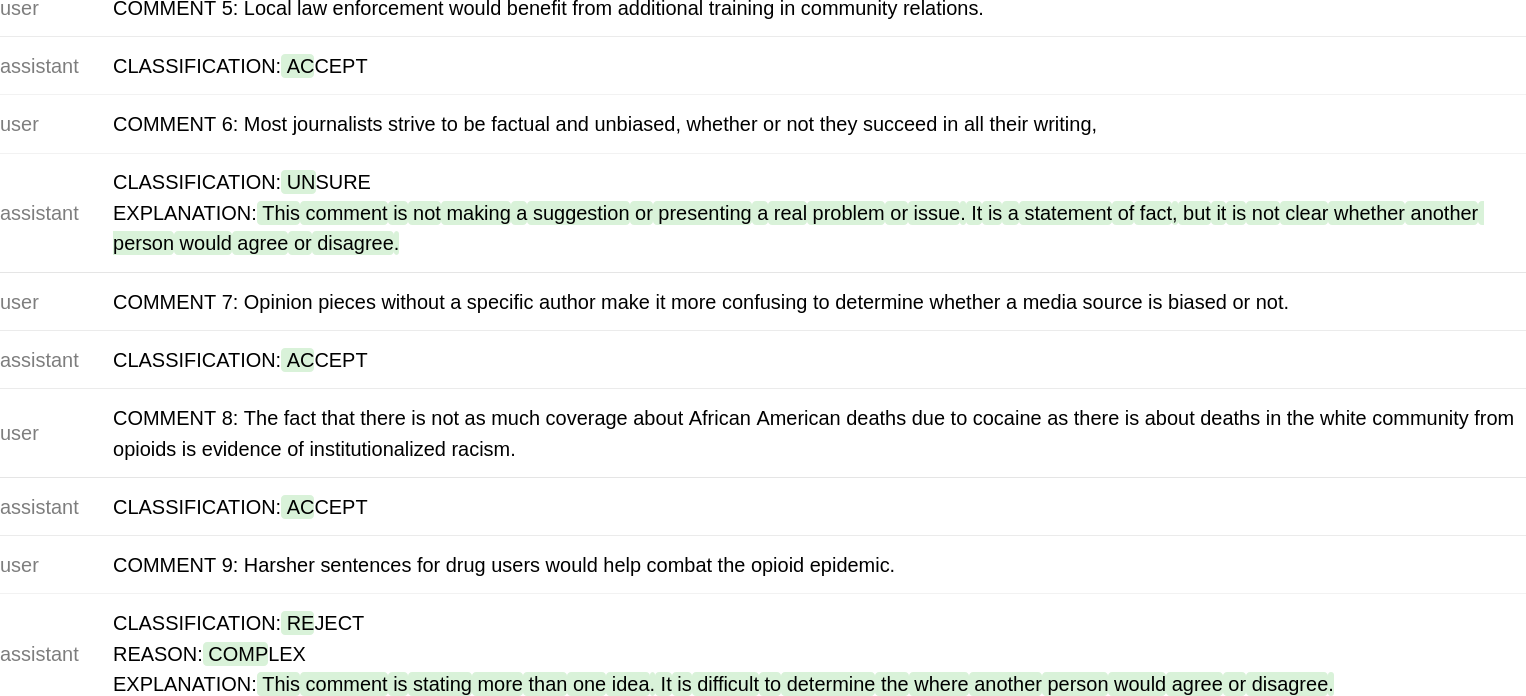
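One way to keep the nine configurations straight is to hold them as data. The sketch below mirrors the configuration table; the `Config` class, its field names, and the `select` helper are hypothetical and not taken from the experiment code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Config:
    number: int
    target_classes: int
    examples: bool
    deconstruction: bool
    cot: Optional[str]  # None where the table says N/A


# Values transcribed from the configuration table.
CONFIGS = [
    Config(1, 3, False, False, None),
    Config(2, 3, True, False, None),
    Config(3, 3, False, False, "after_rejection"),
    Config(4, 3, True, False, "after_rejection"),
    Config(5, 7, False, False, None),
    Config(6, 7, False, False, "before_decision"),
    Config(7, 7, False, True, "before_decision"),
    Config(8, 7, False, True, None),
    Config(9, 3, False, True, "before_decision"),
]


def select(**criteria):
    """Return the numbers of all configurations matching every criterion."""
    return [
        c.number
        for c in CONFIGS
        if all(getattr(c, key) == value for key, value in criteria.items())
    ]
```

For example, `select(deconstruction=True)` lists the configurations that use comment deconstruction, which makes pairwise comparisons (such as 5 versus 8) easy to set up.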

Instructions: Three-Class Classification

Discussion Title: Improving Bowling Green / Warren County

Discussion Question: What do you believe should change in Bowling Green/Warren County in order to make it a better place to live, work and spend time?

---

You will be presented with comments posted on Polis discussion platform.

Classify each comment objectively based on whether it meets the given guidelines.

---

Classifications:

- ACCEPT: Comment is coherent, makes a suggestion, or presents a real problem or issue.

- UNSURE: Unclear whether the comment meets the guidelines for ACCEPT.

- REJECT: Comment should definitely be rejected for one of the reasons listed below.

---

Reasons for REJECT:

- SPAM: Comments which are spam and add nothing to the discussion.

- COMPLEX: Comments which state more than one idea. It is difficult to determine where another person would agree or disagree.

---

Output format:

CLASSIFICATION: One of the following based on given guidelines: ACCEPT, UNSURE, REJECT.

THOUGHT: Express the reasoning for REJECT classification.

Am I certain: Answer with YES or NO. If unsure, state NO.

REASON: One of the following based on given guidelines: SPAM, COMPLEX

EXPLANATION: Provide an explanation for why the comment was classified as REJECT.

Output: Three-Class Classification

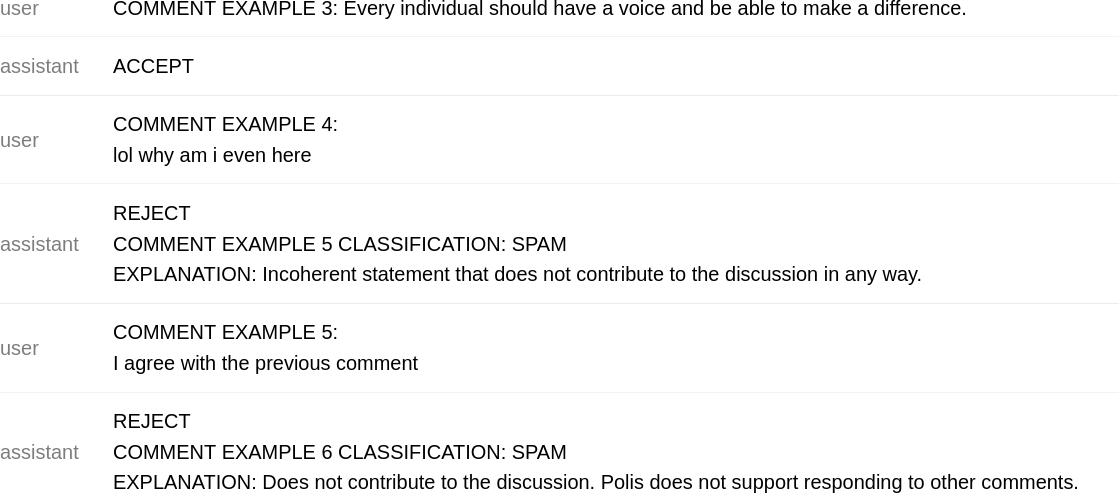
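If the model's response arrives as free text rather than constrained generation, the `KEY: value` output format from the three-class instructions can be read back with a small parser. This is a minimal sketch: `parse_response` is a hypothetical helper, and falling back to UNSURE on malformed output is an assumption chosen so that bad responses go to human review.

```python
ALLOWED_CLASSES = {"ACCEPT", "UNSURE", "REJECT"}
ALLOWED_REASONS = {"SPAM", "COMPLEX"}


def parse_response(text):
    """Parse 'KEY: value' lines into a validated classification record."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # keep only lines that actually contain a colon
            fields[key.strip().upper()] = value.strip()

    label = fields.get("CLASSIFICATION", "").upper()
    if label not in ALLOWED_CLASSES:
        label = "UNSURE"  # assumption: malformed output is deferred to a human

    reason = None
    if label == "REJECT":
        candidate = fields.get("REASON", "").upper()
        reason = candidate if candidate in ALLOWED_REASONS else None

    return {
        "classification": label,
        "reason": reason,
        "explanation": fields.get("EXPLANATION"),
    }


# Hypothetical model output, invented for illustration.
sample = "CLASSIFICATION: REJECT\nREASON: SPAM\nEXPLANATION: Advertises a product."
result = parse_response(sample)
```

With a constrained-generation framework the label set can be enforced at generation time, in which case only the validation step above would still be useful as a safety net.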

Use of Examples

Second-Thought Technique

- False positives (valid comments wrongly rejected) cause more harm

- Allow the model to turn a REJECT into UNSURE
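The second-thought step can be sketched as a simple rule over two fields of the three-class output format: the initial classification and the answer to "Am I certain". The function name is hypothetical, and treating any answer other than YES as uncertainty is an assumption in line with the instruction "If unsure, state NO".

```python
def apply_second_thought(classification, certain):
    """Downgrade REJECT to UNSURE unless the model confirms it is certain."""
    if classification == "REJECT" and certain.strip().upper() != "YES":
        return "UNSURE"
    return classification
```

Only rejections are revisited, so a doubtful rejection is routed to a human moderator while ACCEPT and UNSURE decisions pass through unchanged.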

Instructions: Seven-Class Classification

Discussion Title: Improving Bowling Green / Warren County

Discussion Question: What do you believe should change in Bowling Green/Warren County in order to make it a better place to live, work and spend time?

---

Classify each comment objectively based on the following guidelines:

- ACCEPT: mentions a real problem related to the discussion.

- ACCEPT: recommends a realistic and actionable solution related to the discussion.

- ACCEPT: makes a sincere suggestion related to the discussion.

- IRRELEVANT: frivolous, irrelevant, unrelated to the discussion.

- IRRELEVANT: does not contribute to the discussion in a meaningful way.

- SPAM: incoherent or lacks seriousness.

- SPAM: provides neither a problem nor a solution.

- UNPROFESSIONAL: the language is informal, colloquial, disrespectful or distasteful.

- SCOPE: cannot be addressed within the scope of original question.

- COMPLEX: introduces multiple ideas, even if they are related to the discussion.

- COMPLEX: discusses distinct problems, making it difficult to determine where another person would agree or disagree.

- UNSURE: may be accepted if it appears somewhat related to the discussion.

---

Output format:

CLASSIFICATION: One of the following based on given guidelines: ACCEPT, UNSURE, SPAM, IRRELEVANT, UNPROFESSIONAL, SCOPE, COMPLEX.

EXPLANATION: Provide an explanation for the classification.

Instructions: Comment Deconstruction

Output format:

PROBLEM: The specific problem mentioned in the comment. If only an action is suggested and no problem is explicitly mentioned, state None.

ACTION: What suggestion or change is proposed. If only a problem is mentioned and no action is suggested, state None.

HOW MANY IDEAS: Number of distinct ideas introduced in the comment.

THOUGHT: Deliberate about how the comment should be classified.

CLASSIFICATION: ACCEPT, UNSURE, SPAM, COMPLEX.

REASON: If comment was not classified as ACCEPT, explain.

Output: Comment Deconstruction and Thought Statements
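The deconstruction output format lends itself to a typed record, which makes the intermediate fields (problem, action, idea count) available for inspection alongside the final label. The sketch below is illustrative: the `Deconstruction` class, the parser, and the sample text are assumptions, not the author's code or real model output.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deconstruction:
    problem: Optional[str]
    action: Optional[str]
    n_ideas: Optional[int]
    thought: str
    classification: str
    reason: Optional[str]


def _optional(value):
    """Map an empty string or the literal 'None' to a missing value."""
    value = value.strip()
    return None if value.lower() in {"", "none"} else value


def parse_deconstruction(text):
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip().upper()] = value.strip()
    ideas = fields.get("HOW MANY IDEAS", "")
    return Deconstruction(
        problem=_optional(fields.get("PROBLEM", "")),
        action=_optional(fields.get("ACTION", "")),
        n_ideas=int(ideas) if ideas.isdigit() else None,
        thought=fields.get("THOUGHT", ""),
        # Assumption: a missing label defaults to UNSURE for human review.
        classification=fields.get("CLASSIFICATION", "UNSURE").upper(),
        reason=_optional(fields.get("REASON", "")),
    )


# Hypothetical model output, invented for illustration.
sample = (
    "PROBLEM: Traffic congestion downtown\n"
    "ACTION: None\n"
    "HOW MANY IDEAS: 1\n"
    "THOUGHT: States one clear problem.\n"
    "CLASSIFICATION: ACCEPT\n"
    "REASON: None"
)
record = parse_deconstruction(sample)
```

Keeping the idea count as an integer allows simple audits, for instance checking how often a COMPLEX label coincides with more than one extracted idea.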

Results

Comment Moderation

- Accuracy is generally the same across configurations

- Unsure rate increases with complexity of task

- Deconstruction reduces false positive rate

- CoT not as effective as deconstruction

- Examples must be specific to dataset
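The three quantities named in these results (accuracy, unsure rate, false positive rate) can be made concrete with a short sketch. The definitions below are assumptions, since the slides do not spell them out: REJECT is treated as the positive class, UNSURE predictions are excluded from accuracy, and the false positive rate is taken over comments that human moderators accepted.

```python
def moderation_metrics(predicted, human):
    """Compare model labels (ACCEPT/UNSURE/REJECT) with human labels (ACCEPT/REJECT)."""
    if len(predicted) != len(human):
        raise ValueError("predicted and human must have the same length")

    n = len(predicted)
    unsure = sum(p == "UNSURE" for p in predicted)
    # Assumption: only comments the model actually decided count toward accuracy.
    decided = [(p, h) for p, h in zip(predicted, human) if p != "UNSURE"]
    correct = sum(p == h for p, h in decided)
    # False positive: the model rejects a comment that humans accepted.
    false_pos = sum(p == "REJECT" and h == "ACCEPT" for p, h in decided)
    human_accepts = sum(h == "ACCEPT" for h in human)

    return {
        "accuracy": correct / len(decided) if decided else None,
        "unsure_rate": unsure / n if n else None,
        "false_positive_rate": false_pos / human_accepts if human_accepts else None,
    }


# Hypothetical labels, invented for illustration.
predicted = ["ACCEPT", "REJECT", "UNSURE", "REJECT"]
human = ["ACCEPT", "ACCEPT", "ACCEPT", "REJECT"]
metrics = moderation_metrics(predicted, human)
```

Reporting the unsure rate next to accuracy matters under these definitions, because a model can raise its accuracy simply by deferring more comments to UNSURE.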

Configurations

- 1: Baseline

- 2: Examples

- 3: Thought

- 4: Thought + Examples

- 5: 7-class Baseline

- 6: Thought

- 7: Thought + Deconstruction

- 8: Deconstruction

- 9: Deconstruction, 3-class

Conclusion

LLMs in structuring online debates

- Potential of LLMs for simple tasks

- Risk of hallucinations and incorrect output

- Chaining simple tasks for complex reasoning

- Augmenting vs replacing human moderation processes

- LLMs’ limitations in processing complex instructions and sentences

- Complex instructions

- Relationship modeling based on double and triple negatives

- Reliability and bias

- Critical need for ethical and inclusive technology deployment

Future Research Directions

- Semantic extraction and reasoning during discourse

- Exploring connections across topics

- Individual feedback before posting a comment

- Generalizing techniques to platforms like Kialo, Hacker News
